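HashMap has been part of the JDK since 1.2, but it is not thread-safe, so extra care is needed when several threads operate on it. In JDK 1.5 the great Doug Lea brought us the java.util.concurrent package, and with it a thread-safe Map: ConcurrentHashMap. So how does ConcurrentHashMap manage to be thread-safe and still support highly concurrent operations?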

ConcurrentHashMap 1.7

Storage structure

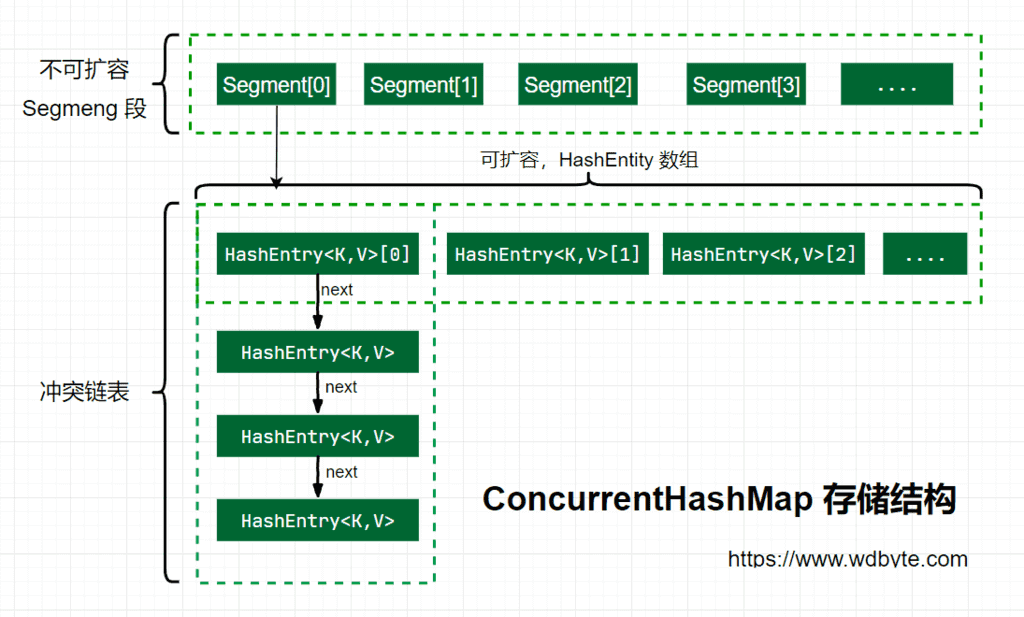

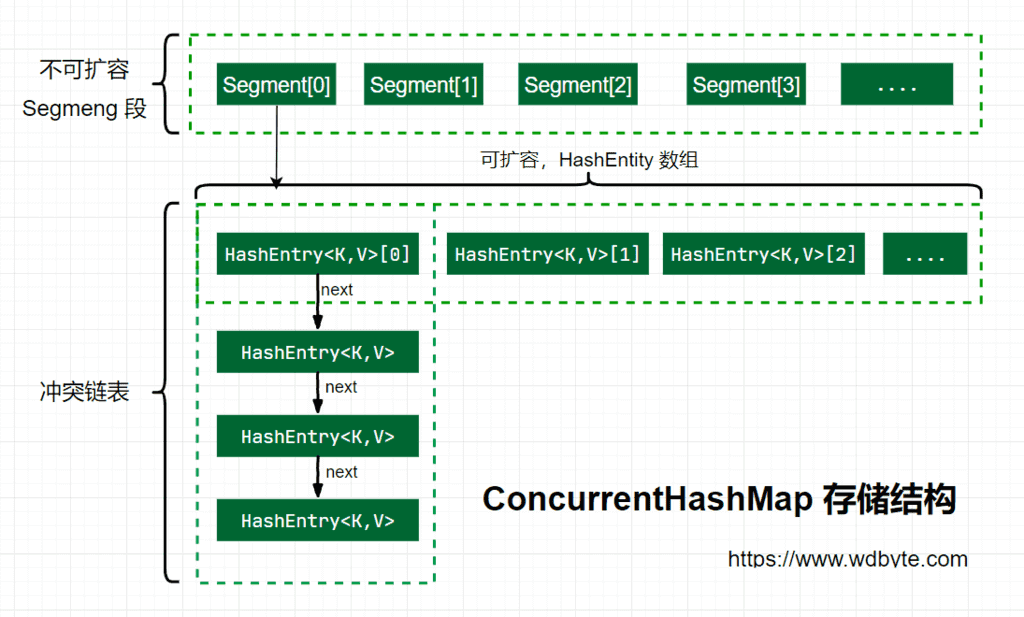

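In Java 7, ConcurrentHashMap is made up of many Segments, and each Segment is structured much like a HashMap. The table inside each Segment can therefore be resized, but the number of Segments is fixed once the map has been initialized. The default is 16 Segments, so a Java 7 ConcurrentHashMap can be thought of as supporting at most 16 concurrently writing threads by default.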

Initialization

Let's trace the initialization flow, starting from the no-argument constructor of ConcurrentHashMap:

```java
/**
 * Creates a new, empty map with a default initial capacity (16),
 * load factor (0.75) and concurrencyLevel (16).
 */
public ConcurrentHashMap() {
    this(DEFAULT_INITIAL_CAPACITY, DEFAULT_LOAD_FACTOR, DEFAULT_CONCURRENCY_LEVEL);
}
```

The no-argument constructor passes in three default values:

```java
/**
 * The default initial capacity for this table,
 * used when not otherwise specified in a constructor.
 */
static final int DEFAULT_INITIAL_CAPACITY = 16;

/**
 * The default load factor for this table, used when not
 * otherwise specified in a constructor.
 */
static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**
 * The default concurrency level for this table, used when not
 * otherwise specified in a constructor.
 */
static final int DEFAULT_CONCURRENCY_LEVEL = 16;
```

Next, the constructor that does the actual work:

```java
/**
 * Creates a new, empty map with the specified initial
 * capacity, load factor and concurrency level.
 *
 * @param initialCapacity the initial capacity. The implementation
 * performs internal sizing to accommodate this many elements.
 * @param loadFactor the load factor threshold, used to control resizing.
 * Resizing may be performed when the average number of elements per
 * bin exceeds this threshold.
 * @param concurrencyLevel the estimated number of concurrently
 * updating threads. The implementation performs internal sizing
 * to try to accommodate this many threads.
 * @throws IllegalArgumentException if the initial capacity is
 * negative or the load factor or concurrencyLevel are
 * nonpositive.
 */
@SuppressWarnings("unchecked")
public ConcurrentHashMap(int initialCapacity,
                         float loadFactor, int concurrencyLevel) {
    if (!(loadFactor > 0) || initialCapacity < 0 || concurrencyLevel <= 0)
        throw new IllegalArgumentException();
    // Cap the concurrency level at MAX_SEGMENTS (1 << 16 = 65536)
    if (concurrencyLevel > MAX_SEGMENTS)
        concurrencyLevel = MAX_SEGMENTS;
    // Find power-of-two sizes best matching arguments
    int sshift = 0;
    int ssize = 1;
    // Find the smallest power of two >= concurrencyLevel:
    // ssize is that value and sshift its exponent (ssize == 1 << sshift)
    while (ssize < concurrencyLevel) {
        ++sshift;
        ssize <<= 1;
    }
    // Shift used to extract the segment index from the high bits of the hash
    this.segmentShift = 32 - sshift;
    // Mask used to extract the segment index
    this.segmentMask = ssize - 1;
    // Initial capacity of the whole map
    if (initialCapacity > MAXIMUM_CAPACITY)
        initialCapacity = MAXIMUM_CAPACITY;
    // capacity / ssize (16 / 16 = 1 by default), rounded up:
    // the number of HashEntry slots each segment needs
    int c = initialCapacity / ssize;
    if (c * ssize < initialCapacity)
        ++c;
    // The minimum capacity for per-segment tables. Must be a power of two,
    // at least two to avoid immediate resizing on next use after lazy construction.
    int cap = MIN_SEGMENT_TABLE_CAPACITY;
    while (cap < c)
        cap <<= 1;
    // create segments and segments[0]
    Segment<K,V> s0 =
        new Segment<K,V>(loadFactor, (int)(cap * loadFactor),
                         (HashEntry<K,V>[])new HashEntry[cap]);
    Segment<K,V>[] ss = (Segment<K,V>[])new Segment[ssize];
    // putOrderedObject is an ordered (lazy) store: earlier writes cannot be
    // reordered after it, but unlike a volatile write it does not guarantee
    // that the value becomes visible to other threads immediately
    UNSAFE.putOrderedObject(ss, SBASE, s0); // ordered write of segments[0]
    this.segments = ss;
}
```

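Initialization mainly does the following:

- Validates the arguments.

- Caps the concurrency level concurrencyLevel at MAX_SEGMENTS (1 << 16); the default value is 16.

- Finds the smallest power of two that is greater than or equal to concurrencyLevel and uses it as the length of the segments array (ssize); the default is 16.

- Records segmentShift. With ssize = 2^N, segmentShift is 32 - N; it is used later in put to locate the segment. The default is 32 - 4 = 28.

- Records segmentMask, which is ssize - 1; the default is 16 - 1 = 15.

- Initializes segments[0] with a default table size of 2 and a load factor of 0.75. The resize threshold is (int)(2 * 0.75) = 1, so inserting the second element triggers a resize.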
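To make the arithmetic in the list above concrete, here is a minimal sketch that repeats only the sizing part of the constructor. The class name SegmentSizing and its fields are made up for illustration; the two constants are copied from the values discussed above, and the MAXIMUM_CAPACITY clamp is left out for brevity.

```java
// Illustrative only: SegmentSizing is a hypothetical helper, not a JDK class.
final class SegmentSizing {
    static final int MAX_SEGMENTS = 1 << 16;
    static final int MIN_SEGMENT_TABLE_CAPACITY = 2;

    final int ssize;         // length of the segments array
    final int segmentShift;  // 32 - log2(ssize)
    final int segmentMask;   // ssize - 1
    final int cap;           // table length of each segment

    SegmentSizing(int initialCapacity, int concurrencyLevel) {
        if (concurrencyLevel > MAX_SEGMENTS)
            concurrencyLevel = MAX_SEGMENTS;
        int sshift = 0;
        int size = 1;
        while (size < concurrencyLevel) {   // smallest power of two >= concurrencyLevel
            ++sshift;
            size <<= 1;
        }
        this.ssize = size;
        this.segmentShift = 32 - sshift;
        this.segmentMask = size - 1;
        int c = initialCapacity / size;     // slots per segment, rounded up
        if (c * size < initialCapacity)
            ++c;
        int capacity = MIN_SEGMENT_TABLE_CAPACITY;
        while (capacity < c)                // round up to a power of two, at least 2
            capacity <<= 1;
        this.cap = capacity;
    }

    public static void main(String[] args) {
        SegmentSizing d = new SegmentSizing(16, 16);
        System.out.println(d.ssize + " " + d.segmentShift + " " + d.segmentMask + " " + d.cap);
    }
}
```

With the defaults (16, 16) this gives 16 segments, a shift of 28, a mask of 15 and a per-segment table of length 2. A request such as (100, 10) is rounded up to 16 segments with tables of length 8.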

put

Now the source of the put method:

```java
/**
 * Maps the specified key to the specified value in this table.
 * Neither the key nor the value can be null.
 *
 * <p> The value can be retrieved by calling the <tt>get</tt> method
 * with a key that is equal to the original key.
 *
 * @param key key with which the specified value is to be associated
 * @param value value to be associated with the specified key
 * @return the previous value associated with <tt>key</tt>, or
 * <tt>null</tt> if there was no mapping for <tt>key</tt>
 * @throws NullPointerException if the specified key or value is null
 */
@SuppressWarnings("unchecked")
public V put(K key, V value) {
    Segment<K,V> s;
    // Unlike HashMap, value must not be null, otherwise an NPE is thrown
    if (value == null)
        throw new NullPointerException();
    int hash = hash(key);
    // Unsigned right shift of the hash by 28 bits (segmentShift, set during
    // initialization), then AND with segmentMask = 15. In other words, the
    // top 4 bits of the hash select the segment.
    int j = (hash >>> segmentShift) & segmentMask;
    if ((s = (Segment<K,V>)UNSAFE.getObject          // nonvolatile; recheck
         (segments, (j << SSHIFT) + SBASE)) == null) //  in ensureSegment
        s = ensureSegment(j);
    return s.put(key, hash, value, false);
}

/**
 * Returns the segment for the given index, creating it and
 * recording in segment table (via CAS) if not already present.
 *
 * @param k the index
 * @return the segment
 */
@SuppressWarnings("unchecked")
private Segment<K,V> ensureSegment(int k) {
    final Segment<K,V>[] ss = this.segments;
    long u = (k << SSHIFT) + SBASE; // raw offset
    Segment<K,V> seg;
    // Check whether the Segment at offset u is null
    if ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u)) == null) {
        Segment<K,V> proto = ss[0]; // use segment 0 as prototype
        // Length of the HashEntry table in segment 0
        int cap = proto.table.length;
        // Load factor of segment 0; all segments share the same loadFactor
        float lf = proto.loadFactor;
        // Compute the resize threshold
        int threshold = (int)(cap * lf);
        // Create a HashEntry array of capacity cap
        HashEntry<K,V>[] tab = (HashEntry<K,V>[])new HashEntry[cap];
        // Check again whether the Segment at u is null, since another
        // thread may have created it in the meantime
        if ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u))
            == null) { // recheck
            Segment<K,V> s = new Segment<K,V>(lf, threshold, tab);
            // Spin while the Segment at u is still null
            while ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u))
                   == null) {
                // Publish with CAS; only one thread can succeed
                if (UNSAFE.compareAndSwapObject(ss, u, null, seg = s))
                    break;
            }
        }
    }
    return seg;
}
```
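The lazy creation done by ensureSegment (read, build a candidate, then publish it with CAS so that exactly one thread wins) can be sketched without Unsafe. The version below is an approximation that swaps in AtomicReferenceArray for the raw offset arithmetic; LazySlots and ensure are hypothetical names, not JDK APIs.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.function.Supplier;

// Illustrative only: a generic "create the slot on first use" array.
final class LazySlots<T> {
    private final AtomicReferenceArray<T> slots;

    LazySlots(int size) {
        this.slots = new AtomicReferenceArray<>(size);
    }

    /** Returns the object in slot k, creating and publishing it if absent. */
    T ensure(int k, Supplier<T> factory) {
        T seg = slots.get(k);                   // volatile read
        if (seg == null) {
            T created = factory.get();          // built before the CAS; dropped if we lose the race
            while ((seg = slots.get(k)) == null) {
                if (slots.compareAndSet(k, null, created)) {
                    seg = created;              // our candidate was published
                    break;
                }
            }
        }
        return seg;
    }
}
```

Every caller of ensure(k, ...) gets the same instance for a given k, no matter how many threads race on the first access; the losers simply discard their candidate.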

The put and ensureSegment code mainly locates the segment and initializes it if necessary. The next step is to insert the key and value into that segment:

```java
final V put(K key, int hash, V value, boolean onlyIfAbsent) {
    // Try to take the exclusive ReentrantLock. If that fails,
    // scanAndLockForPut acquires the lock and may return a node.
    HashEntry<K,V> node = tryLock() ? null :
        // While trying to lock, scans for a node containing the given key;
        // if none is found, creates and returns a new node.
        scanAndLockForPut(key, hash, value);
    V oldValue;
    try {
        HashEntry<K,V>[] tab = table;
        // Compute the bucket index for the entry
        int index = (tab.length - 1) & hash;
        // Volatile read of the entry at index, so that updates made by
        // other threads are visible
        HashEntry<K,V> first = entryAt(tab, index);
        for (HashEntry<K,V> e = first;;) {
            if (e != null) {
                // Walk the chain looking for the key; if found, replace the value
                K k;
                // Condition for key equality
                if ((k = e.key) == key ||
                    (e.hash == hash && key.equals(k))) {
                    oldValue = e.value;
                    if (!onlyIfAbsent) {
                        e.value = value;
                        ++modCount;
                    }
                    break;
                }
                e = e.next;
            }
            else {
                // End of chain reached. If scanAndLockForPut already created
                // the node, link it in front of the chain (head insertion);
                // otherwise create it with first as its next.
                if (node != null)
                    node.setNext(first);
                else
                    node = new HashEntry<K,V>(hash, key, value, first);
                int c = count + 1;
                // Resize if the new count exceeds the threshold and the
                // table is still below the maximum capacity
                if (c > threshold && tab.length < MAXIMUM_CAPACITY)
                    rehash(node);
                else
                    // Store node at index; it is now the head of the chain
                    setEntryAt(tab, index, node);
                ++modCount;
                count = c;
                oldValue = null;
                break;
            }
        }
    } finally {
        unlock();
    }
    return oldValue;
}
```

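Segment extends ReentrantLock, so a Segment can acquire its lock directly:

- Call tryLock(); if the lock cannot be obtained, keep trying through scanAndLockForPut.

- Compute the index for the put and read the HashEntry at that position.

- If the position holds a value, walk the chain:

  - If the key of the current node equals the key being put, replace the value.

  - Otherwise move on to the next node, until the value has been replaced or the end of the chain is reached; in the latter case continue with the "no value" logic below.

- If the position holds no value (or the end of the chain was reached):

  - If the new count exceeds the resize threshold and the table is below the maximum capacity, resize.

  - Otherwise insert the node directly at the head of the chain.

- If the key already existed, return the old value after replacing it; otherwise return null.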

In the first step, scanAndLockForPut keeps spinning on tryLock() to acquire the lock. Once the number of spins exceeds a fixed limit, it falls back to lock() and blocks until the lock is available. While spinning, it also looks up the HashEntry at the hash position, which warms things up for the code that follows.

```java
private HashEntry<K,V> scanAndLockForPut(K key, int hash, V value) {
    HashEntry<K,V> first = entryForHash(this, hash);
    HashEntry<K,V> e = first;
    HashEntry<K,V> node = null;
    int retries = -1; // negative while locating node
    // Spin to acquire the lock
    while (!tryLock()) {
        HashEntry<K,V> f; // to recheck first below
        if (retries < 0) {
            if (e == null) {
                if (node == null) // speculatively create node
                    node = new HashEntry<K,V>(hash, key, value, null);
                retries = 0;
            }
            else if (key.equals(e.key))
                retries = 0;
            else
                e = e.next;
        }
        else if (++retries > MAX_SCAN_RETRIES) {
            // Spin limit reached: block until the lock is acquired
            lock();
            break;
        }
        else if ((retries & 1) == 0 &&
                 (f = entryForHash(this, hash)) != first) {
            e = first = f; // re-traverse if entry changed
            retries = -1;
        }
    }
    return node;
}
```
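Stripped of the chain scanning, what scanAndLockForPut does with the lock is a bounded spin followed by a blocking acquire. A minimal sketch of just that pattern is below. SpinThenBlock, acquire and the value 64 for MAX_RETRIES are assumptions chosen for illustration, not the JDK's code.

```java
import java.util.concurrent.locks.ReentrantLock;

// Illustrative only: spin on tryLock() a bounded number of times, then block.
final class SpinThenBlock {
    static final int MAX_RETRIES = 64;   // assumed spin bound for this sketch

    /**
     * Acquires the lock and returns how many tryLock() attempts failed.
     * The caller owns the lock on return and must call unlock().
     */
    static int acquire(ReentrantLock lock) {
        int retries = 0;
        while (!lock.tryLock()) {
            if (++retries > MAX_RETRIES) {
                lock.lock();             // stop spinning and park until the lock is free
                break;
            }
        }
        return retries;
    }
}
```

Spinning pays off when the lock is usually held only briefly, because the thread avoids being parked and woken up again; the bound keeps a long wait from burning CPU indefinitely.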

Resizing

A resize in ConcurrentHashMap always doubles the table of a segment. When entries move from the old array to the new one, each entry either stays at the same index or moves to index + oldSize. The node passed in as a parameter is inserted at the head of its bucket once the resize is done.

```java
/**
 * Doubles size of table and repacks entries, also adding the
 * given node to new table
 */
@SuppressWarnings("unchecked")
private void rehash(HashEntry<K,V> node) {
    /*
     * Reclassify nodes in each list to new table. Because we
     * are using power-of-two expansion, the elements from
     * each bin must either stay at same index, or move with a
     * power of two offset. We eliminate unnecessary node
     * creation by catching cases where old nodes can be
     * reused because their next fields won't change.
     * Statistically, at the default threshold, only about
     * one-sixth of them need cloning when a table
     * doubles. The nodes they replace will be garbage
     * collectable as soon as they are no longer referenced by
     * any reader thread that may be in the midst of
     * concurrently traversing table. Entry accesses use plain
     * array indexing because they are followed by volatile
     * table write.
     */
    HashEntry<K,V>[] oldTable = table;
    int oldCapacity = oldTable.length;
    // New capacity: twice the old one
    int newCapacity = oldCapacity << 1;
    // New resize threshold
    threshold = (int)(newCapacity * loadFactor);
    // New array
    HashEntry<K,V>[] newTable =
        (HashEntry<K,V>[]) new HashEntry[newCapacity];
    // New mask. For example, the default capacity 2 doubles to 4;
    // minus one gives 3, which is 11 in binary
    int sizeMask = newCapacity - 1;
    for (int i = 0; i < oldCapacity ; i++) {
        // Walk the old array
        HashEntry<K,V> e = oldTable[i];
        if (e != null) {
            HashEntry<K,V> next = e.next;
            // New index: either unchanged, or old index + old capacity
            int idx = e.hash & sizeMask;
            if (next == null)   //  Single node on list
                newTable[idx] = e;
            else { // Reuse consecutive sequence at same slot
                // The bucket holds a chain
                HashEntry<K,V> lastRun = e;
                int lastIdx = idx;
                // After this loop, lastRun and every node after it
                // map to the same new index
                for (HashEntry<K,V> last = next;
                     last != null;
                     last = last.next) {
                    int k = last.hash & sizeMask;
                    if (k != lastIdx) {
                        lastIdx = k;
                        lastRun = last;
                    }
                }
                // The run starting at lastRun is moved as a whole
                newTable[lastIdx] = lastRun;
                // Clone remaining nodes
                for (HashEntry<K,V> p = e; p != lastRun; p = p.next) {
                    // Head-insert a copy of each remaining node at index k
                    V v = p.value;
                    int h = p.hash;
                    int k = h & sizeMask;
                    HashEntry<K,V> n = newTable[k];
                    newTable[k] = new HashEntry<K,V>(h, p.key, v, n);
                }
            }
        }
    }
    // Head-insert the new node
    int nodeIndex = node.hash & sizeMask; // add the new node
    node.setNext(newTable[nodeIndex]);
    newTable[nodeIndex] = node;
    table = newTable;
}
```

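The two inner for loops deserve a closer look. The first loop searches for lastRun, the first node of the trailing run in which every node maps to the same new index. Because the next pointers inside that run do not have to change, the whole tail is linked into the new table as is. The second loop then handles the nodes in front of lastRun: each of them is cloned and inserted at the head of its new bucket. They are cloned rather than relinked because, as the source comment points out, reader threads may still be traversing the old chains without holding the lock, so the old nodes have to stay as they are.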
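The lastRun idea is easier to see on a single chain. The sketch below repeats the two loops for one old bucket using a simplified immutable node type; LastRunDemo, Entry, transfer and keys are hypothetical names introduced only for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: the two loops of rehash applied to one old bucket.
final class LastRunDemo {
    static final class Entry {
        final int hash;
        final String key;
        final Entry next;
        Entry(int hash, String key, Entry next) {
            this.hash = hash;
            this.key = key;
            this.next = next;
        }
    }

    /** Moves the chain starting at e into newTable (length must be a power of two). */
    static void transfer(Entry e, Entry[] newTable) {
        if (e == null)
            return;
        int sizeMask = newTable.length - 1;
        Entry lastRun = e;
        int lastIdx = e.hash & sizeMask;
        // Loop 1: find the start of the trailing run that shares one new index
        for (Entry last = e.next; last != null; last = last.next) {
            int k = last.hash & sizeMask;
            if (k != lastIdx) {
                lastIdx = k;
                lastRun = last;
            }
        }
        newTable[lastIdx] = lastRun;             // the tail is reused without copying
        // Loop 2: clone the nodes before lastRun and head-insert the copies
        for (Entry p = e; p != lastRun; p = p.next) {
            int k = p.hash & sizeMask;
            newTable[k] = new Entry(p.hash, p.key, newTable[k]);
        }
    }

    /** Collects the keys of a chain in order, for inspection. */
    static List<String> keys(Entry e) {
        List<String> out = new ArrayList<>();
        for (; e != null; e = e.next)
            out.add(e.key);
        return out;
    }
}
```

For a chain a(hash 3) → b(1) → c(5) → d(9) moved into a table of length 4, the new indexes are 3, 1, 1, 1. lastRun is b, so b → c → d is reused unchanged in bucket 1, and only a is cloned into bucket 3. The old chain is never modified, which is what keeps concurrent readers safe.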

get

The get method is fairly simple:

- Compute where the key is stored.

- Walk the chain at that position and return the value whose key matches.

```java
/**
 * Returns the value to which the specified key is mapped,
 * or {@code null} if this map contains no mapping for the key.
 *
 * <p>More formally, if this map contains a mapping from a key
 * {@code k} to a value {@code v} such that {@code key.equals(k)},
 * then this method returns {@code v}; otherwise it returns
 * {@code null}.  (There can be at most one such mapping.)
 *
 * @throws NullPointerException if the specified key is null
 */
public V get(Object key) {
    Segment<K,V> s; // manually integrate access methods to reduce overhead
    HashEntry<K,V>[] tab;
    int h = hash(key);
    long u = (((h >>> segmentShift) & segmentMask) << SSHIFT) + SBASE;
    if ((s = (Segment<K,V>)UNSAFE.getObjectVolatile(segments, u)) != null &&
        (tab = s.table) != null) {
        for (HashEntry<K,V> e = (HashEntry<K,V>) UNSAFE.getObjectVolatile
                 (tab, ((long)(((tab.length - 1) & h)) << TSHIFT) + TBASE);
             e != null; e = e.next) {
            K k;
            if ((k = e.key) == key || (e.hash == h && key.equals(k)))
                return e.value;
        }
    }
    return null;
}
```
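Both put and get locate an entry in two steps from the same hash: the high bits pick the segment and the low bits pick the bucket inside that segment's table. A small sketch of the index arithmetic is below. Locate and its method names are hypothetical, and the extra hash(key) mixing step of the real class is left out.

```java
// Illustrative only: the two index computations used by the Java 7 layout.
final class Locate {
    /** Segment index: high bits of the hash, selected by shift and mask. */
    static int segmentIndex(int hash, int segmentShift, int segmentMask) {
        return (hash >>> segmentShift) & segmentMask;
    }

    /** Bucket index inside a segment: low bits of the hash. */
    static int bucketIndex(int hash, int tableLength) {
        return (tableLength - 1) & hash;
    }

    public static void main(String[] args) {
        int hash = 0xA0000005;
        System.out.println(segmentIndex(hash, 28, 15)); // top 4 bits
        System.out.println(bucketIndex(hash, 4));       // low 2 bits
    }
}
```

With the default shift of 28 and mask of 15, the hash 0xA0000005 lands in segment 10 (its top four bits), and in a table of length 4 it lands in bucket 1 (its low two bits). Using different ends of the hash keeps the two choices largely independent.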

ConcurrentHashMap 1.8

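Storage structure

Compared with Java 7, ConcurrentHashMap changed considerably: it is no longer a Segment array + HashEntry array + linked lists, but a Node array + linked lists / red-black trees.

Initialization

In Java 8 the default constructor does nothing at all; the default table size is 16:

```java
/**
 * Creates a new, empty map with the default initial table size (16).
 */
public ConcurrentHashMap() {
}
```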

The actual initialization happens on put(key, value), which calls putVal. If the table (the Node array) is empty, putVal calls initTable():

```java
/**
 * Initializes table, using the size recorded in sizeCtl.
 */
private final Node<K,V>[] initTable() {
    Node<K,V>[] tab; int sc;
    while ((tab = table) == null || tab.length == 0) {
        // sizeCtl < 0 means another thread won the CAS and is initializing
        if ((sc = sizeCtl) < 0)
            // Give up the CPU
            Thread.yield(); // lost initialization race; just spin
        else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {
            try {
                if ((tab = table) == null || tab.length == 0) {
                    int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                    @SuppressWarnings("unchecked")
                    Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];
                    table = tab = nt;
                    sc = n - (n >>> 2);
                }
            } finally {
                sizeCtl = sc;
            }
            break;
        }
    }
    return tab;
}
```

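As the code shows, ConcurrentHashMap is initialized with a spin loop plus CAS. Pay attention to the variable sizeCtl, whose value reflects the current state of the table:

- -1: the table is being initialized.

- -N (any other negative value): the table is being resized; according to the field's Javadoc, the value is -(1 + the number of active resizing threads).

- 0 or a positive value while the table has not been initialized: the initial table size to use (0 means the default).

- A positive value once the table has been initialized: the element count at which the next resize is triggered, which is 0.75 times the capacity (sc = n - (n >>> 2) above), not the capacity itself.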
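The role of sizeCtl during initialization can be reproduced in a few lines. The sketch below swaps in an AtomicInteger for the Unsafe-based CAS and leaves out resizing entirely; LazyTable and its accessor are hypothetical names for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative only: lazy table creation guarded by a sizeCtl-style control word.
final class LazyTable {
    static final int DEFAULT_CAPACITY = 16;

    private volatile Object[] table;
    // 0 or positive: requested size before init, resize threshold after; -1: initializing
    private final AtomicInteger sizeCtl = new AtomicInteger(0);

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                     // another thread is initializing
            } else if (sizeCtl.compareAndSet(sc, -1)) {
                try {
                    if ((tab = table) == null) {    // recheck after winning the CAS
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        table = tab = new Object[n];
                        sc = n - (n >>> 2);         // 0.75 * n, the next resize threshold
                    }
                } finally {
                    sizeCtl.set(sc);                // leave the "initializing" state
                }
                break;
            }
        }
        return tab;
    }

    int sizeCtl() {
        return sizeCtl.get();
    }
}
```

After the first call the table has length 16 and sizeCtl holds 12, which is the threshold rather than the capacity. Later calls return the same array without touching the CAS path.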

put

```java
/**
 * Maps the specified key to the specified value in this table.
 * Neither the key nor the value can be null.
 *
 * <p>The value can be retrieved by calling the {@code get} method
 * with a key that is equal to the original key.
 *
 * @param key key with which the specified value is to be associated
 * @param value value to be associated with the specified key
 * @return the previous value associated with {@code key}, or
 *         {@code null} if there was no mapping for {@code key}
 * @throws NullPointerException if the specified key or value is null
 */
public V put(K key, V value) {
    return putVal(key, value, false);
}

/** Implementation for put and putIfAbsent */
final V putVal(K key, V value, boolean onlyIfAbsent) {
    // Unlike HashMap, neither key nor value may be null, otherwise NPE
    if (key == null || value == null) throw new NullPointerException();
    int hash = spread(key.hashCode());
    int binCount = 0;
    for (Node<K,V>[] tab = table;;) {
        Node<K,V> f; int n, i, fh;
        if (tab == null || (n = tab.length) == 0)
            // The table (Node array) is empty: initialize it (spin + CAS)
            tab = initTable();
        else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
            // The bin is empty: insert with CAS, no lock; break on success
            if (casTabAt(tab, i, null,
                         new Node<K,V>(hash, key, value, null)))
                break;                   // no lock when adding to empty bin
        }
        else if ((fh = f.hash) == MOVED)
            tab = helpTransfer(tab, f);
        else {
            V oldVal = null;
            // Lock the head node with synchronized, then insert
            synchronized (f) {
                if (tabAt(tab, i) == f) {
                    // A non-negative head hash means an ordinary linked list
                    if (fh >= 0) {
                        binCount = 1;
                        // Walk the list: overwrite a matching node or append
                        for (Node<K,V> e = f;; ++binCount) {
                            K ek;
                            if (e.hash == hash &&
                                ((ek = e.key) == key ||
                                 (ek != null && key.equals(ek)))) {
                                oldVal = e.val;
                                if (!onlyIfAbsent)
                                    e.val = value;
                                break;
                            }
                            Node<K,V> pred = e;
                            if ((e = e.next) == null) {
                                pred.next = new Node<K,V>(hash, key,
                                                          value, null);
                                break;
                            }
                        }
                    }
                    else if (f instanceof TreeBin) {
                        // The bin is a red-black tree
                        Node<K,V> p;
                        binCount = 2;
                        // putTreeVal returns the existing node, or null
                        // if a new node was added to the tree
                        if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                       value)) != null) {
                            oldVal = p.val;
                            if (!onlyIfAbsent)
                                p.val = value;
                        }
                    }
                }
            }
            if (binCount != 0) {
                if (binCount >= TREEIFY_THRESHOLD)
                    treeifyBin(tab, i);
                if (oldVal != null)
                    return oldVal;
                break;
            }
        }
    }
    addCount(1L, binCount);
    return null;
}
```

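- Compute the hash from the key's hashCode (via spread).

- Check whether the table needs to be initialized.

- f is the Node located for the current key. If it is null, the position is free and the entry is written with CAS; if the CAS fails, the outer loop retries until the write succeeds.

- If the hash of the node at that position is MOVED (-1), a resize is in progress, and the current thread helps with the transfer through helpTransfer before retrying.

- If none of the above applies, the head node is locked with synchronized and the entry is written into the list or tree.

- If binCount reaches TREEIFY_THRESHOLD (8), treeifyBin is called. treeifyBin first checks the table length: the list is converted into a red-black tree only when the length is at least 64; otherwise the table is resized instead.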
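The core of these steps, CAS into an empty bin and otherwise synchronize on the head node of the bin, fits in a toy map. The sketch below is a deliberately reduced illustration with a fixed table of 16 bins and no resizing, trees or removal; TinyConcurrentMap is a hypothetical class, not how the JDK implements it in full.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Illustrative only: "CAS for empty bins, lock the head otherwise".
final class TinyConcurrentMap<K, V> {
    static final class Node<K, V> {
        final int hash;
        final K key;
        volatile V val;
        volatile Node<K, V> next;
        Node(int hash, K key, V val) {
            this.hash = hash;
            this.key = key;
            this.val = val;
        }
    }

    private final AtomicReferenceArray<Node<K, V>> table = new AtomicReferenceArray<>(16);

    static int spread(int h) {
        return (h ^ (h >>> 16)) & 0x7fffffff;
    }

    V put(K key, V value) {
        if (key == null || value == null)
            throw new NullPointerException();
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        for (;;) {
            Node<K, V> f = table.get(i);
            if (f == null) {
                // Empty bin: publish the node with CAS, no lock needed
                if (table.compareAndSet(i, null, new Node<>(hash, key, value)))
                    return null;
                // CAS lost: another thread filled the bin, so loop and retry
            } else {
                synchronized (f) {                 // lock only this bin's head
                    if (table.get(i) == f) {       // head unchanged since we read it
                        for (Node<K, V> e = f;; e = e.next) {
                            if (e.hash == hash && key.equals(e.key)) {
                                V old = e.val;
                                e.val = value;     // overwrite existing mapping
                                return old;
                            }
                            if (e.next == null) {
                                e.next = new Node<>(hash, key, value); // append at tail
                                return null;
                            }
                        }
                    }
                }
            }
        }
    }

    V get(Object key) {
        int hash = spread(key.hashCode());
        for (Node<K, V> e = table.get((table.length() - 1) & hash); e != null; e = e.next)
            if (e.hash == hash && key.equals(e.key))
                return e.val;                      // lock-free read
        return null;
    }
}
```

Locking the head node means two writers only contend when they hit the same bin, which is a much finer granularity than one lock per segment. Reads never take the lock; they rely on the volatile fields.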

get

```java
/**
 * Returns the value to which the specified key is mapped,
 * or {@code null} if this map contains no mapping for the key.
 *
 * <p>More formally, if this map contains a mapping from a key
 * {@code k} to a value {@code v} such that {@code key.equals(k)},
 * then this method returns {@code v}; otherwise it returns
 * {@code null}.  (There can be at most one such mapping.)
 *
 * @throws NullPointerException if the specified key is null
 */
public V get(Object key) {
    Node<K,V>[] tab; Node<K,V> e, p; int n, eh; K ek;
    // Hash that determines the position of the key
    int h = spread(key.hashCode());
    if ((tab = table) != null && (n = tab.length) > 0 &&
        (e = tabAt(tab, (n - 1) & h)) != null) {
        // A node exists at that position and the head has the same hash
        if ((eh = e.hash) == h) {
            if ((ek = e.key) == key || (ek != null && key.equals(ek)))
                // Keys are equal: return the value directly
                return e.val;
        }
        else if (eh < 0)
            // A negative head hash means a resize is in progress or the
            // bin is a red-black tree; delegate to find
            return (p = e.find(h, key)) != null ? p.val : null;
        while ((e = e.next) != null) {
            // Ordinary linked list: walk it
            if (e.hash == h &&
                ((ek = e.key) == key || (ek != null && key.equals(ek))))
                return e.val;
        }
    }
    return null;
}
```

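To summarize the get process:

- Compute the position from the hash.

- Look at that position; if the head node is the one being looked for, return its value directly.

- If the hash of the head node is less than 0, a resize is in progress or the bin is a red-black tree, so search through find.

- If the bin is a linked list, walk it to find the key.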
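The "hash less than 0" check works because ordinary nodes never carry a negative hash: spread clears the sign bit, which leaves negative values free for special nodes. A minimal sketch of that convention is below. SpreadDemo and isSpecial are hypothetical names, while the constant values are the ones the Java 8 source uses for forwarding nodes and tree bins.

```java
// Illustrative only: why a negative head hash marks a special node.
final class SpreadDemo {
    static final int HASH_BITS = 0x7fffffff; // usable bits of a normal node hash
    static final int MOVED = -1;             // hash of a forwarding node (resize in progress)
    static final int TREEBIN = -2;           // hash of the root holder of a tree bin

    /** XORs the high 16 bits into the low 16 bits and clears the sign bit. */
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    /** True for hashes that can only belong to special nodes. */
    static boolean isSpecial(int nodeHash) {
        return nodeHash < 0;
    }

    public static void main(String[] args) {
        System.out.println(spread("key".hashCode()) >= 0);
        System.out.println(isSpecial(MOVED) && isSpecial(TREEBIN));
    }
}
```

Folding the high bits into the low bits also helps small tables, where only the low bits of the hash decide the bin.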

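Summary

In Java 7, ConcurrentHashMap uses segment locking: at any moment only one thread can write to a given Segment. Each Segment is an array structure similar to a HashMap; it can be resized, and colliding entries are chained into linked lists. The number of Segments, however, cannot change once the map has been initialized.

In Java 8, ConcurrentHashMap uses synchronized locks combined with CAS, and the structure becomes a Node array + linked lists / red-black trees, where Node is a structure similar to HashEntry. When a bin collects enough collisions its list is converted into a red-black tree, and when the number of entries in the bin drops below a certain count the tree degrades back into a linked list.

Since lock escalation was introduced for synchronized, its performance is no longer a problem either.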